PROBLEM STATEMENT

Koverse is a Seattle-based platform used to manage, normalize, and interpret large and complex data sets.

The original platform was built in 2012 with a focus on the extract, transform, load (ETL) process of combining and normalizing data.

Over the years, new features were added to the platform in a siloed, piecemeal fashion. The tool worked for one-off tasks but was not very effective for an end-to-end data processing workflow. Koverse was looking to remedy this.

APPROACH

Assessing the Tool to Redesign

User Research, Personas & Journeys

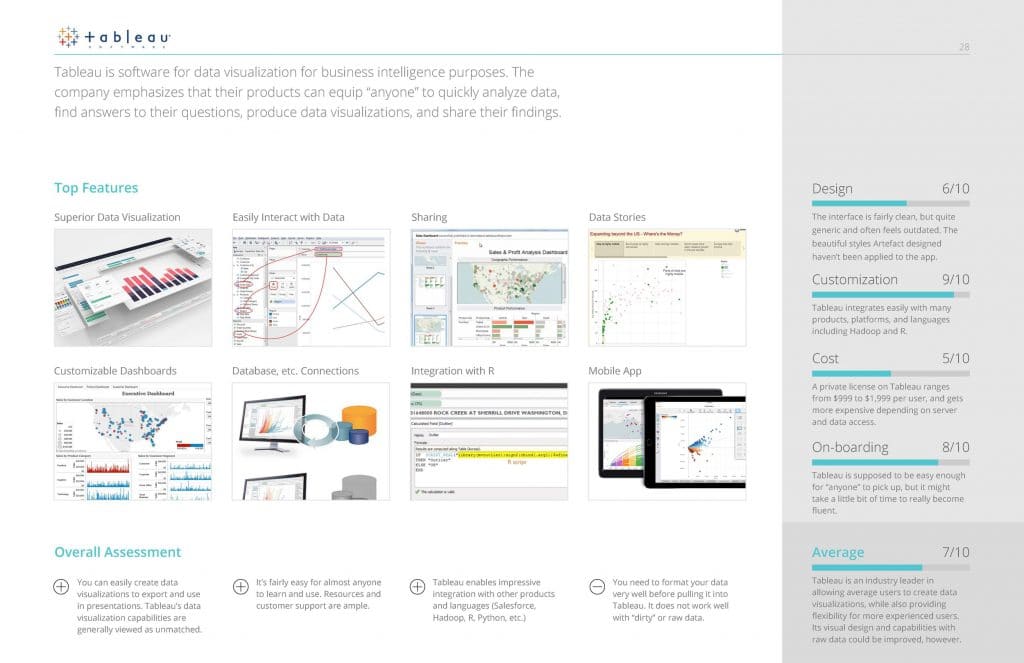

Competitive Analysis

Information Architecture

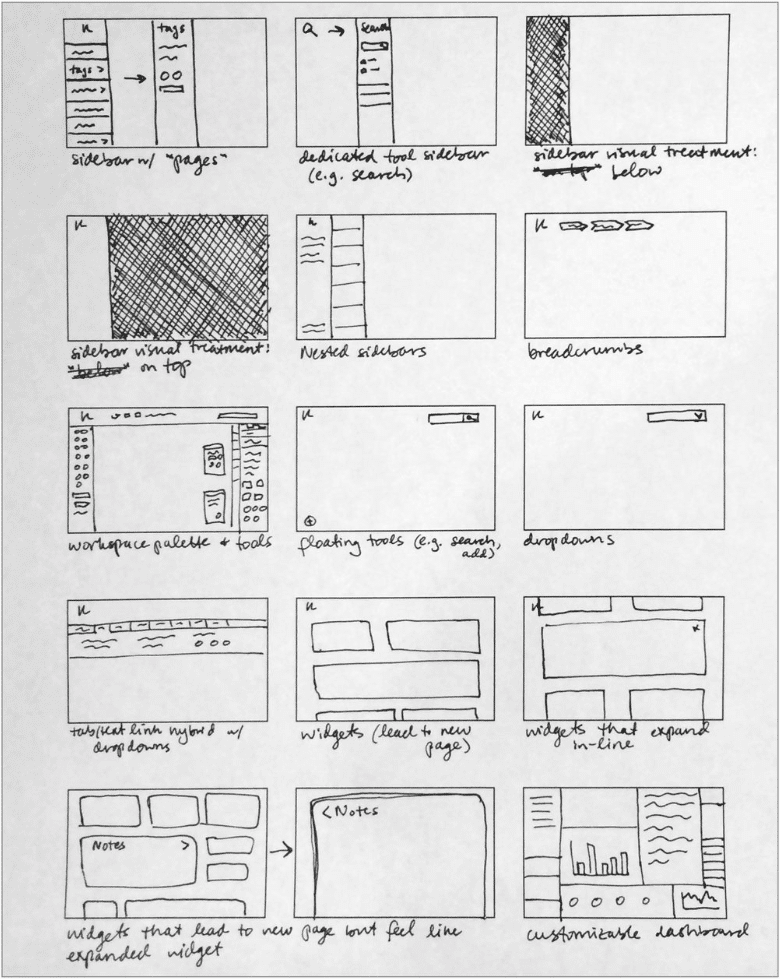

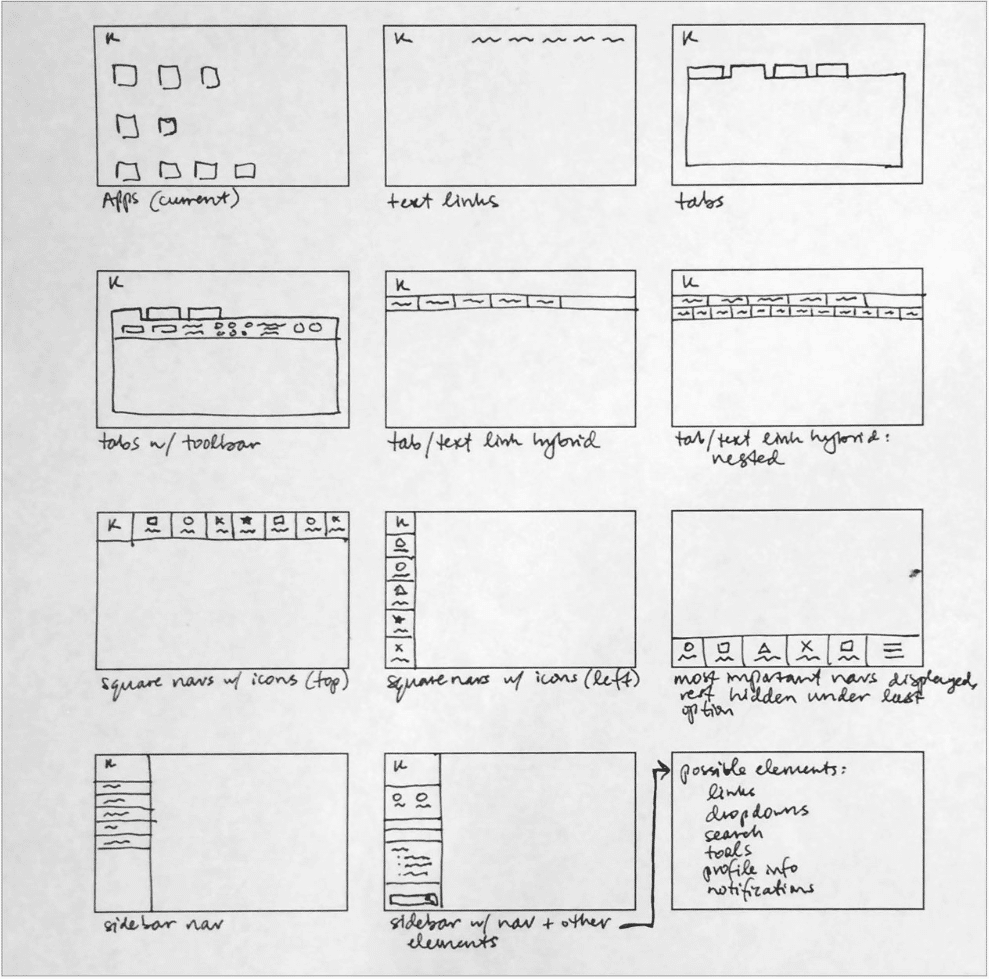

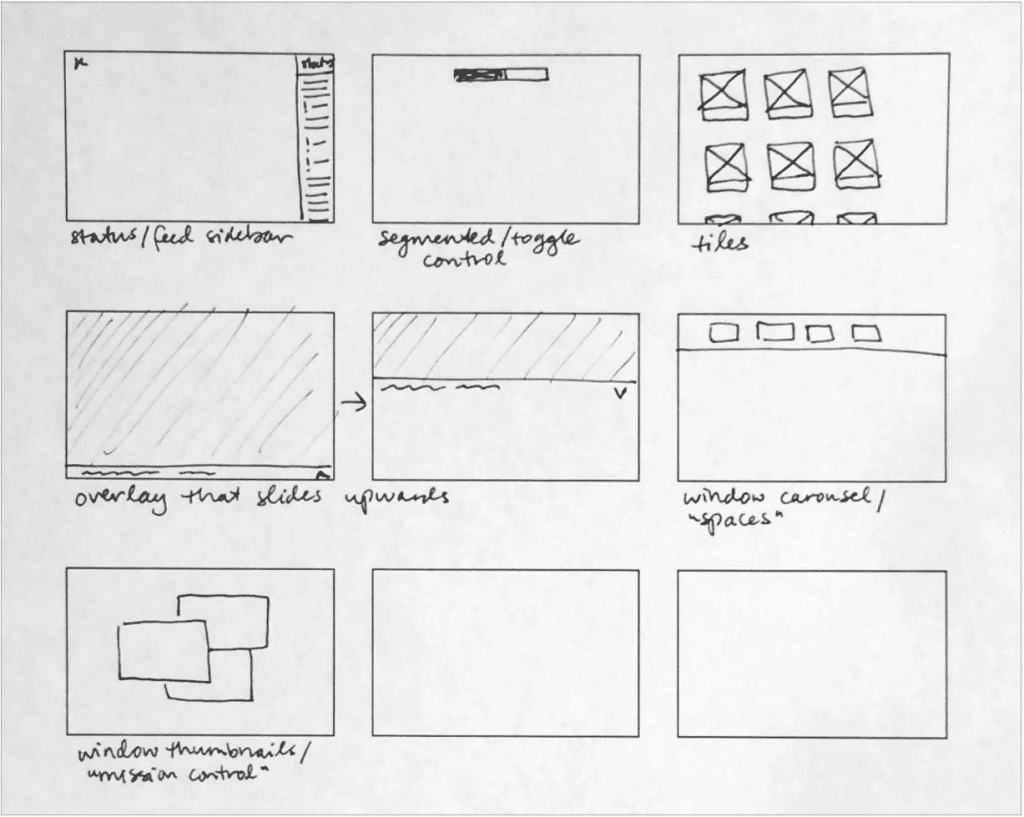

UI Concepts & Ideation

Wireframing & Prototyping

Usability Testing

Development

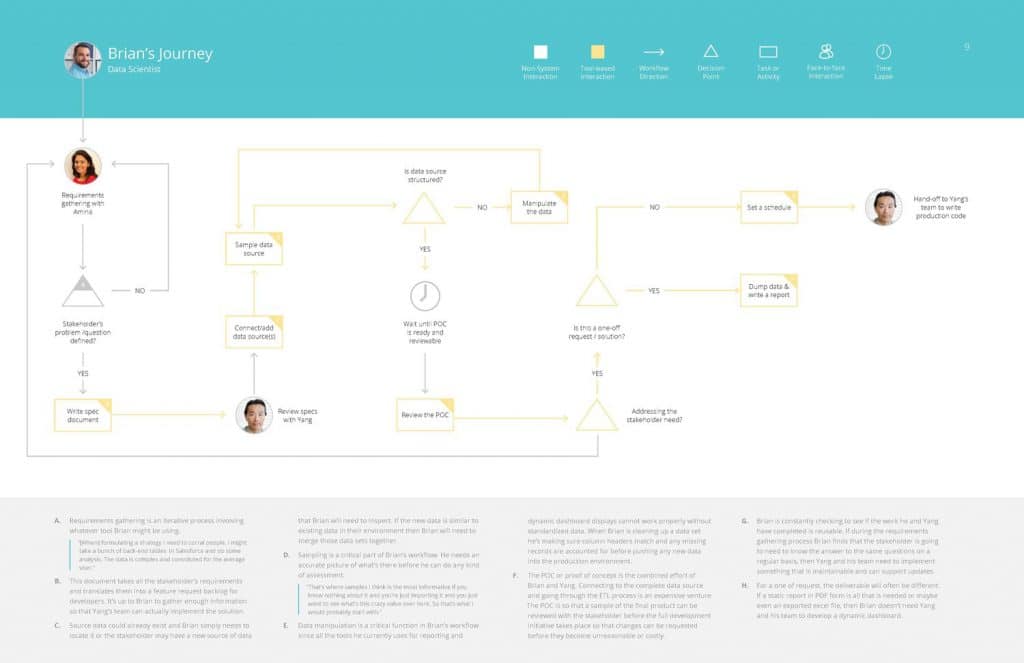

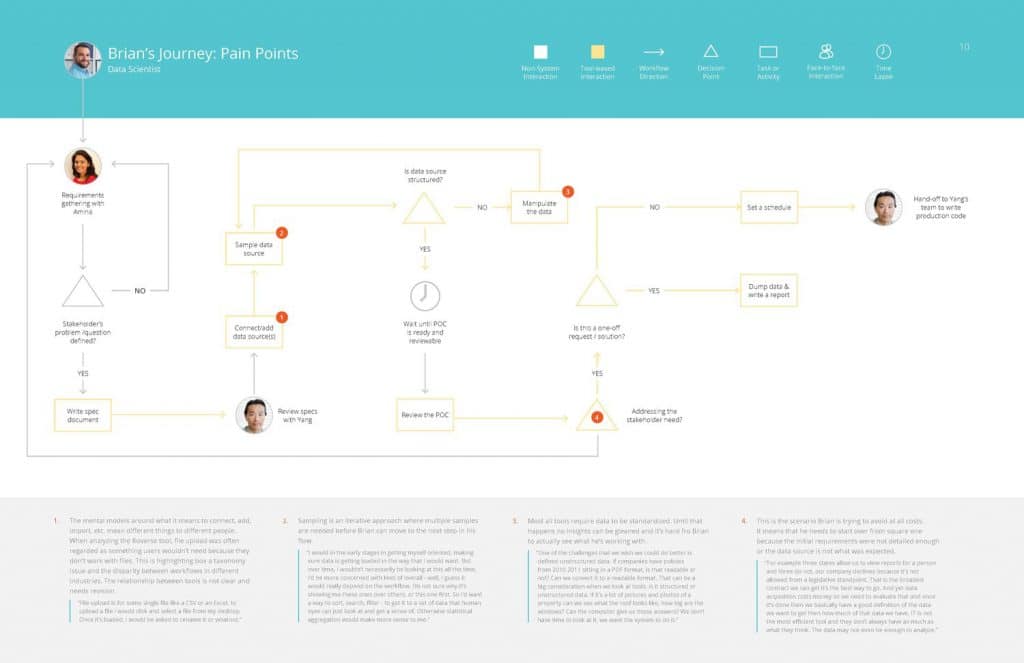

User Research, Personas & Journeys

USER INTERVIEWS

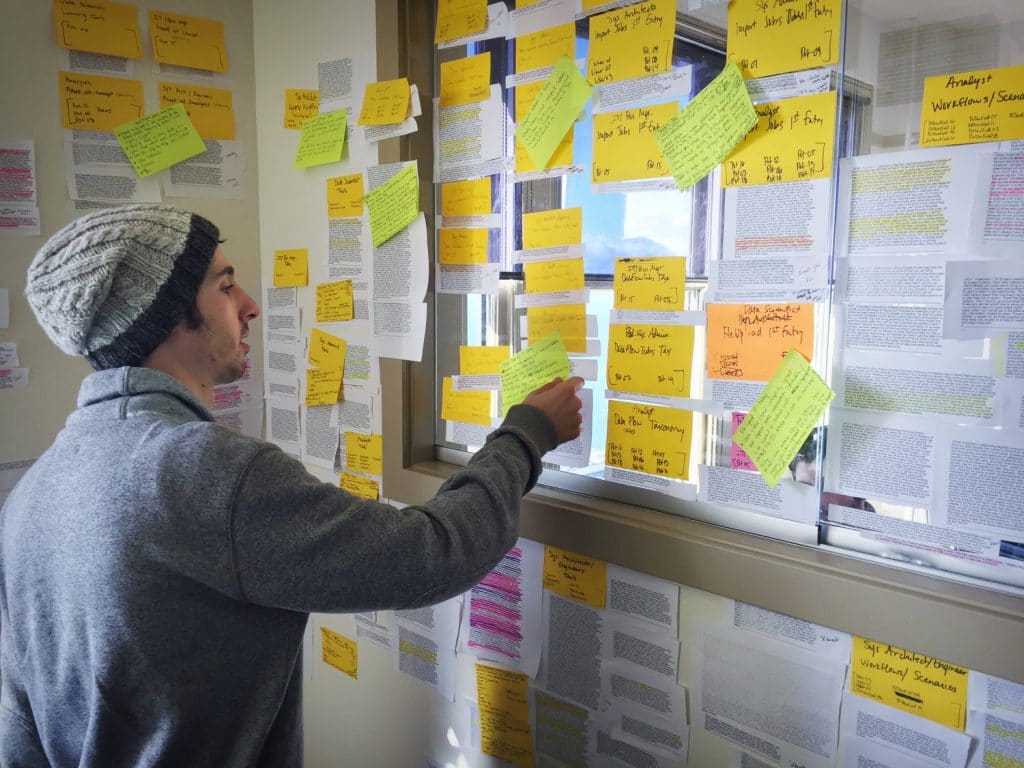

Because none of us on the design team were data scientists, we needed to talk with data scientists to understand their mental models around processing data, and which workflows were more or less common among people in the processing stage.

We spoke with 18 people with job titles ranging from Data Analyst to Business Manager, and then sorted what they told us into common groupings using affinity diagram sorting.

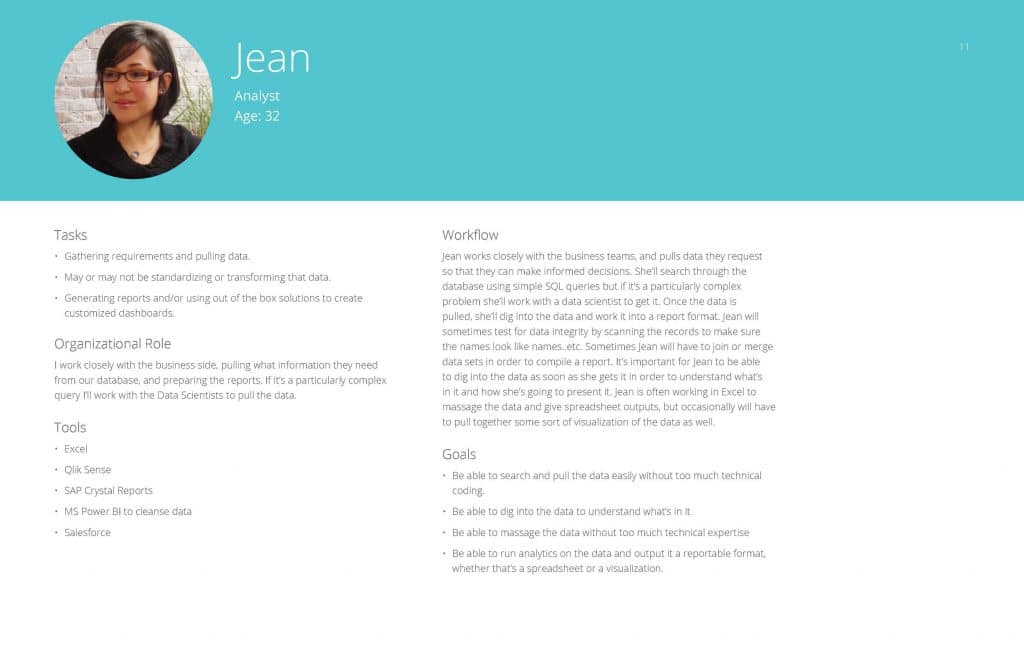

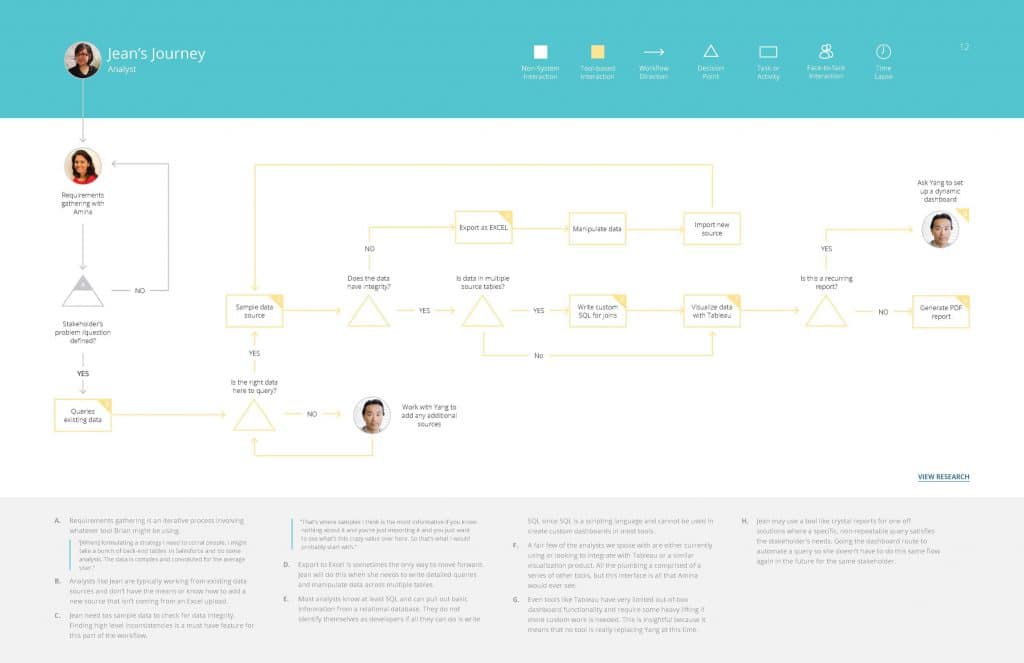

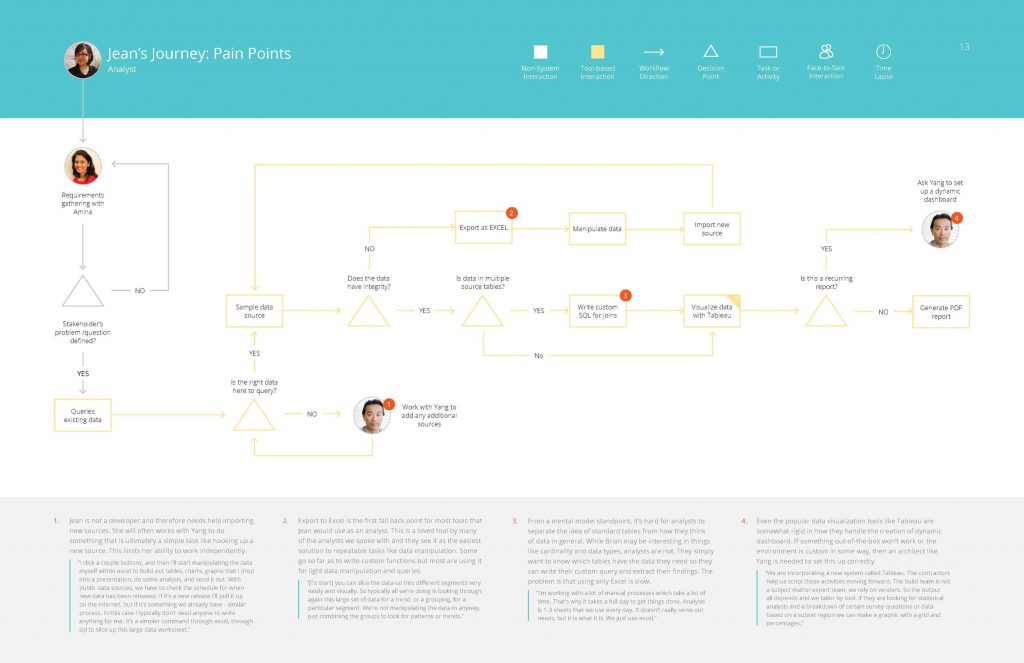

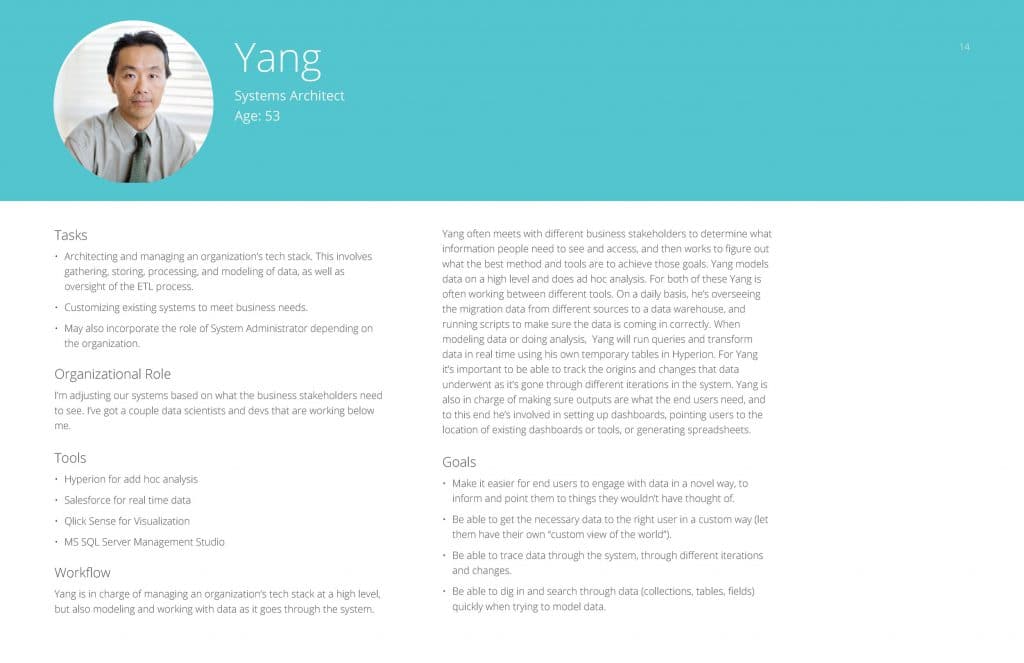

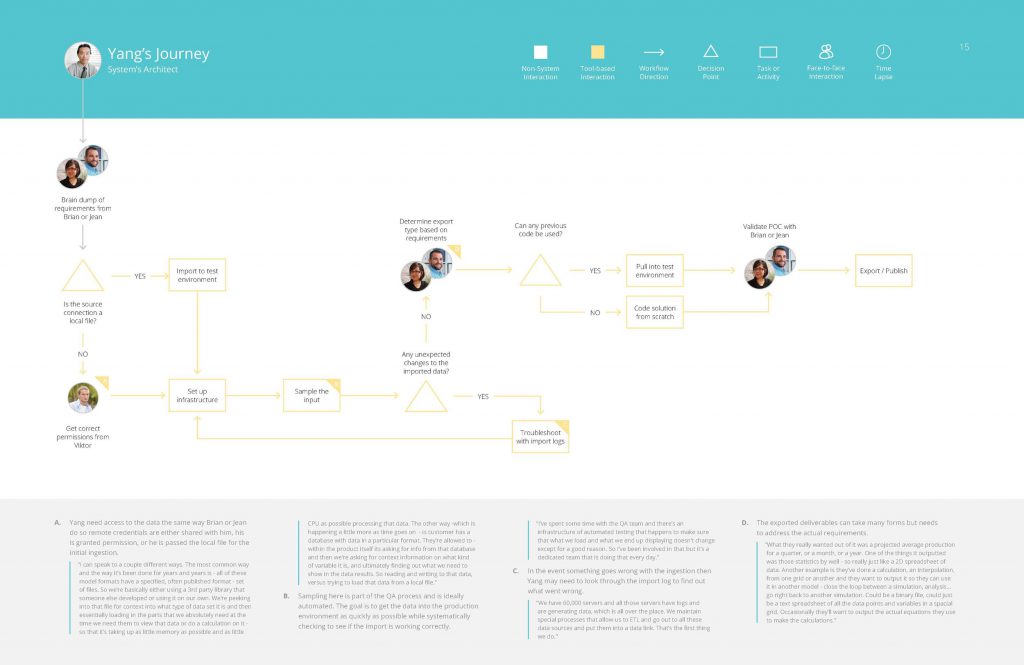

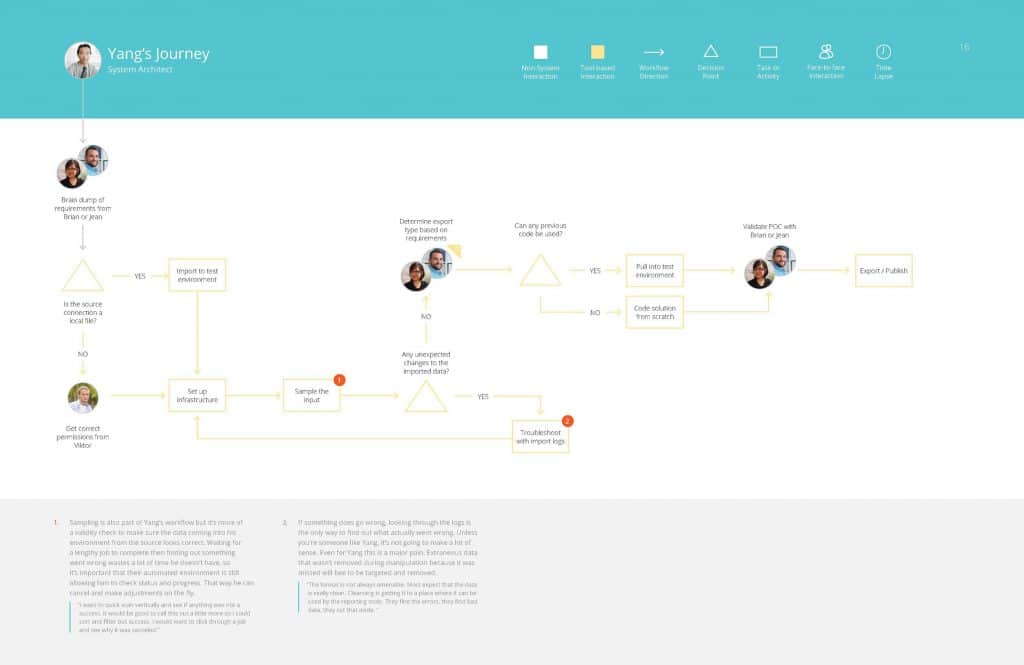

PERSONAS & JOURNEYS

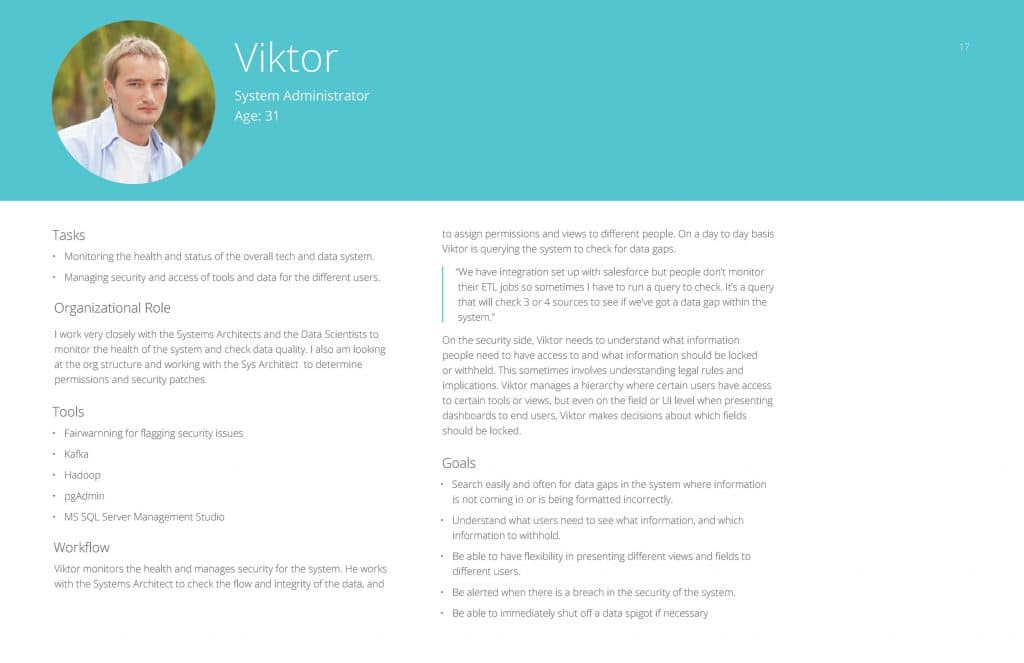

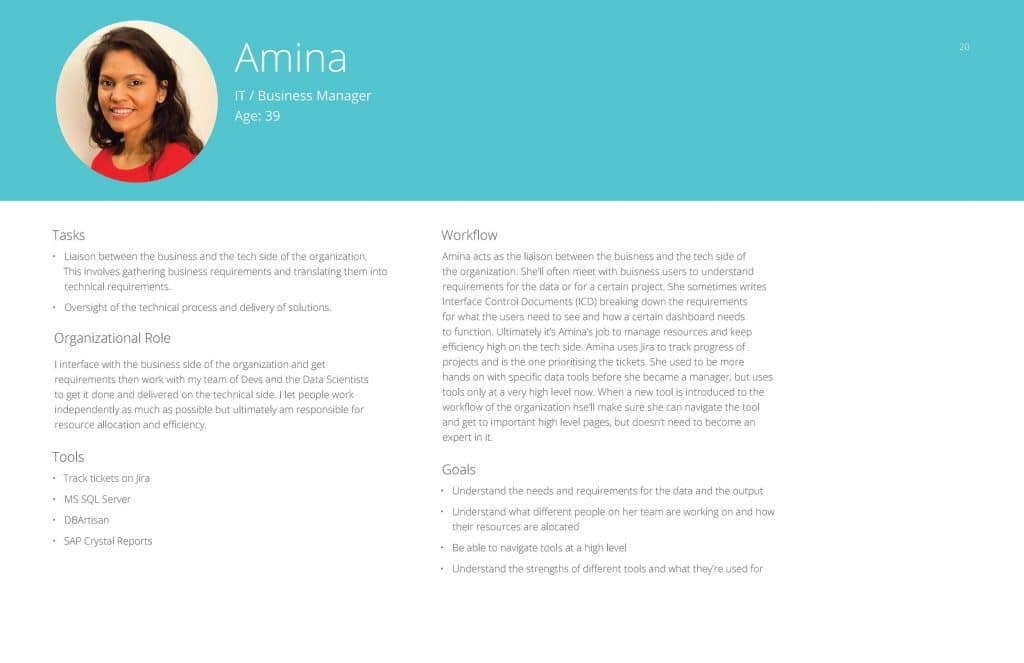

The affinity diagram sorting allowed us to group common responses into themes, and to see how people with different job titles and roles thought about the data processing part of their jobs.

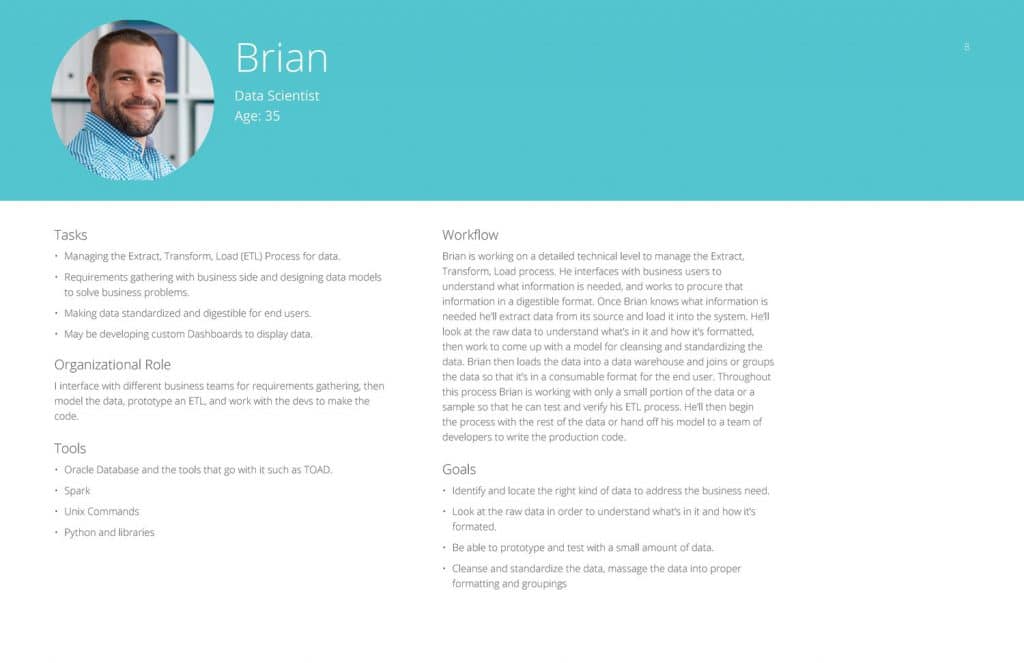

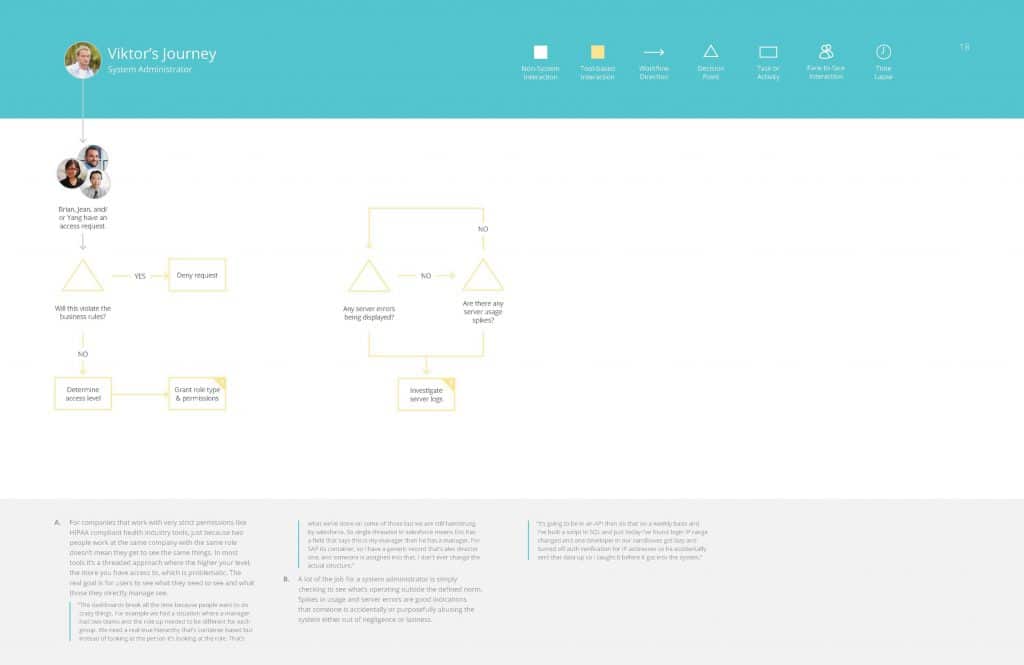

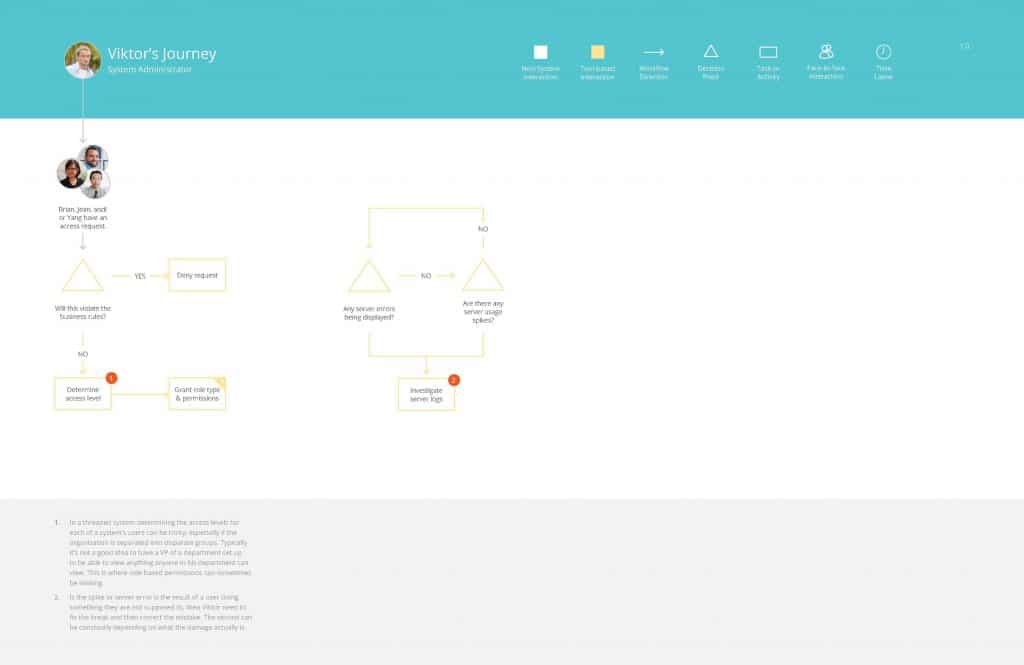

From these groupings of responses, and the different workflows people had walked us through, we were able to assemble in-depth personas for the different roles of people who would likely be interacting with this tool.

Brian – Data Scientist

Jean – Analyst

Yang – Systems Architect

Victor – Systems Administrator

Amina – IT / Business Manager

Information Architecture / Flow Diagramming

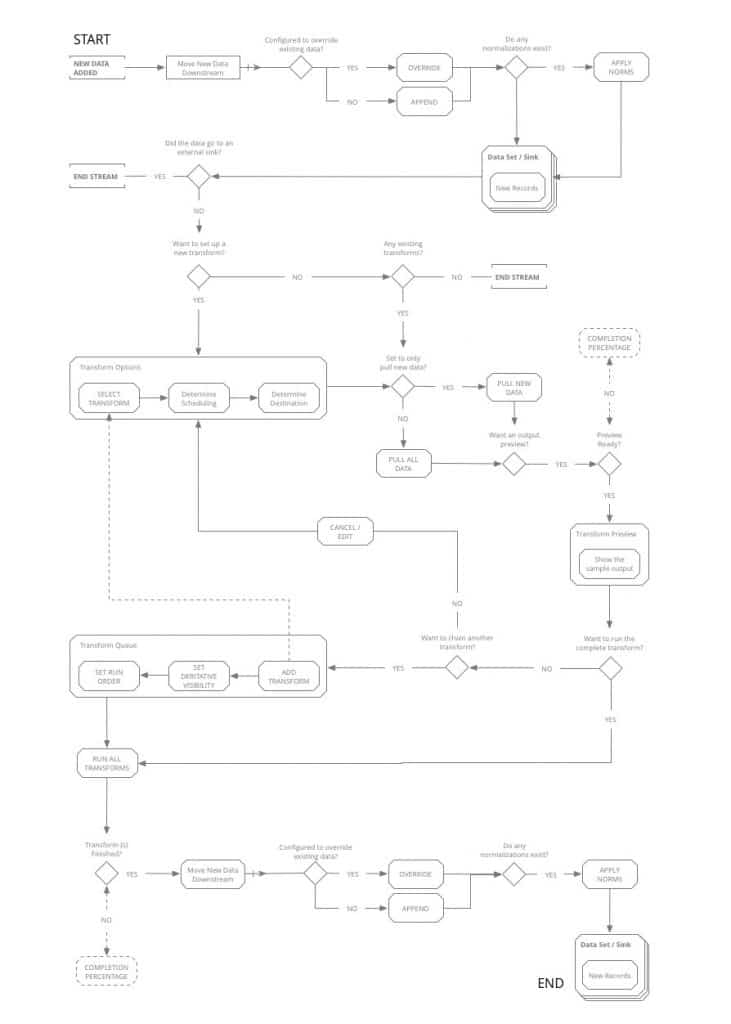

FLOW DIAGRAMS

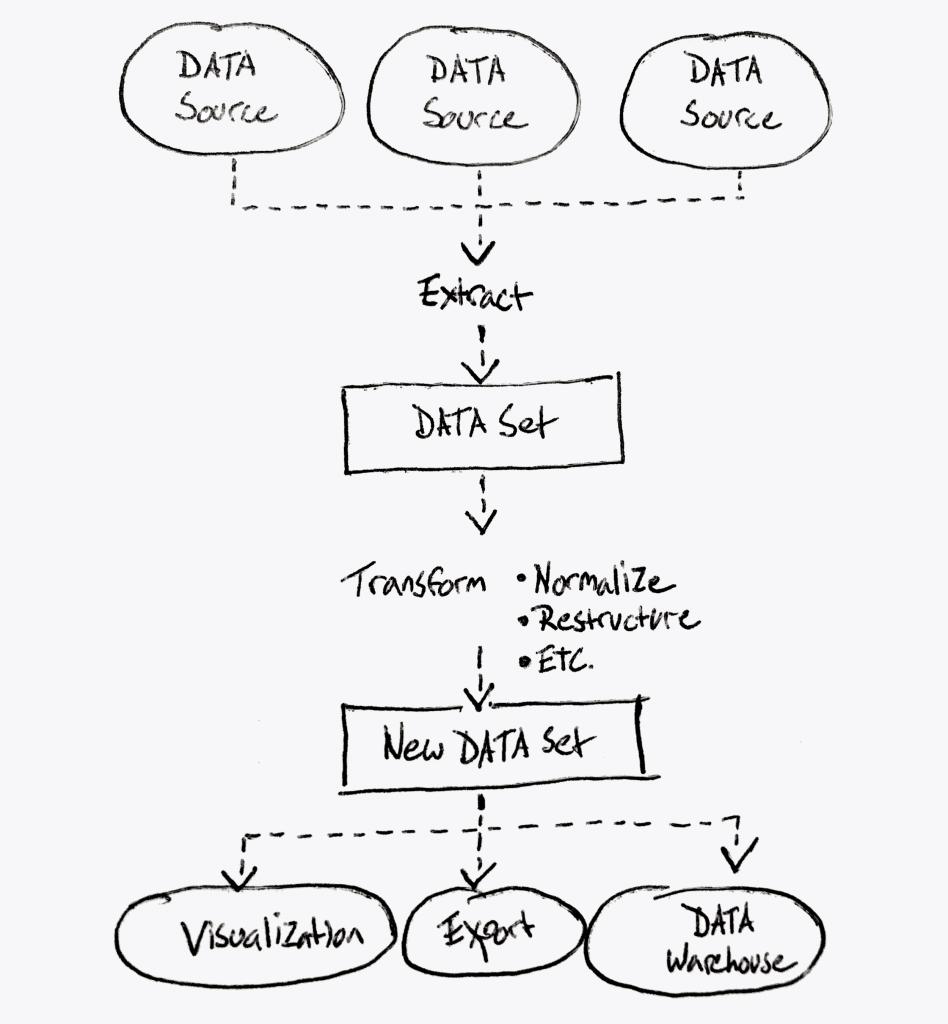

Using our persona journeys and competitive analysis as guides, we set to work mapping out the workflows that would become the main paths the new tool would be designed around.

With a tool this complex, solving for such a varied set of workflows, it was imperative to diagram the functionality and the main goals users would be trying to achieve before jumping into screen-level design.

Wireframing & Prototyping

CONCEPTS & WIREFRAMING

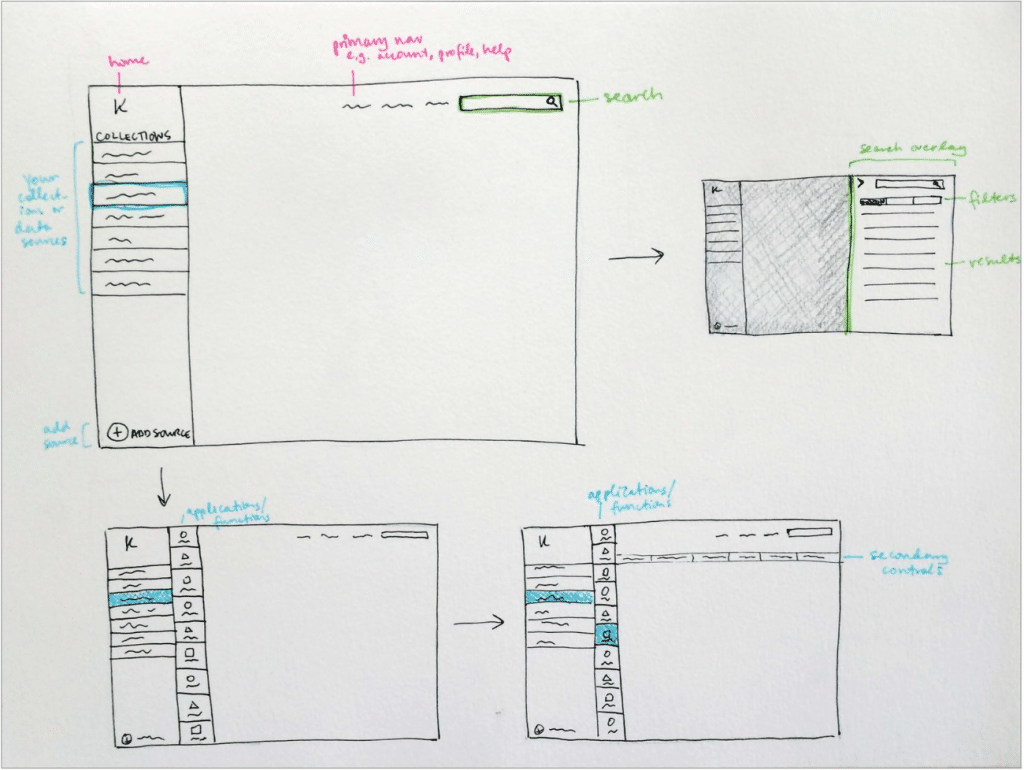

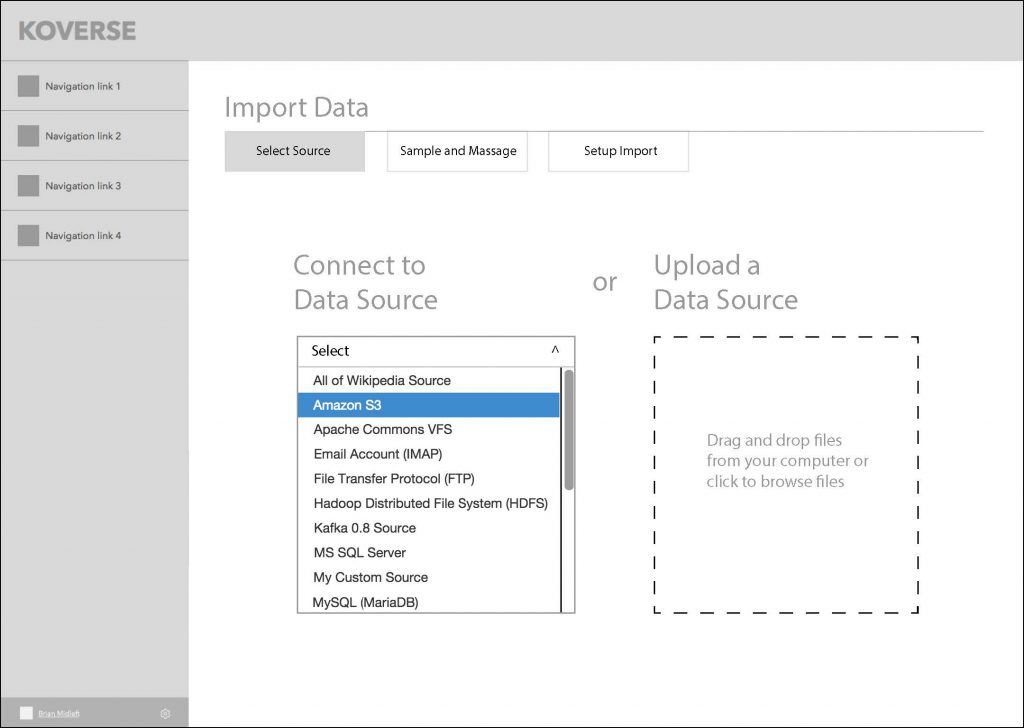

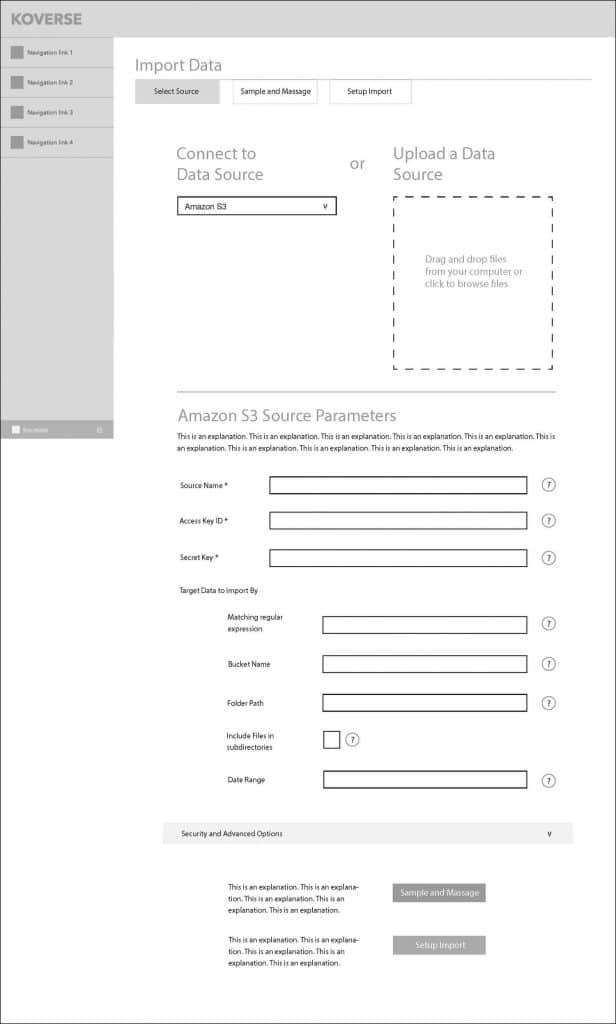

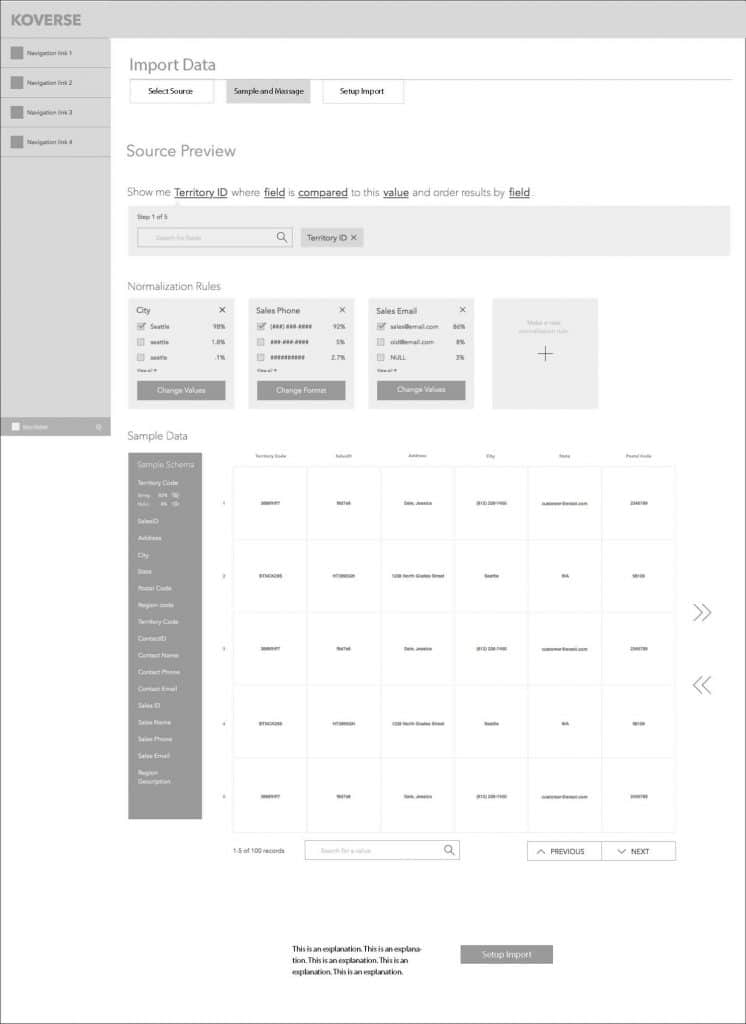

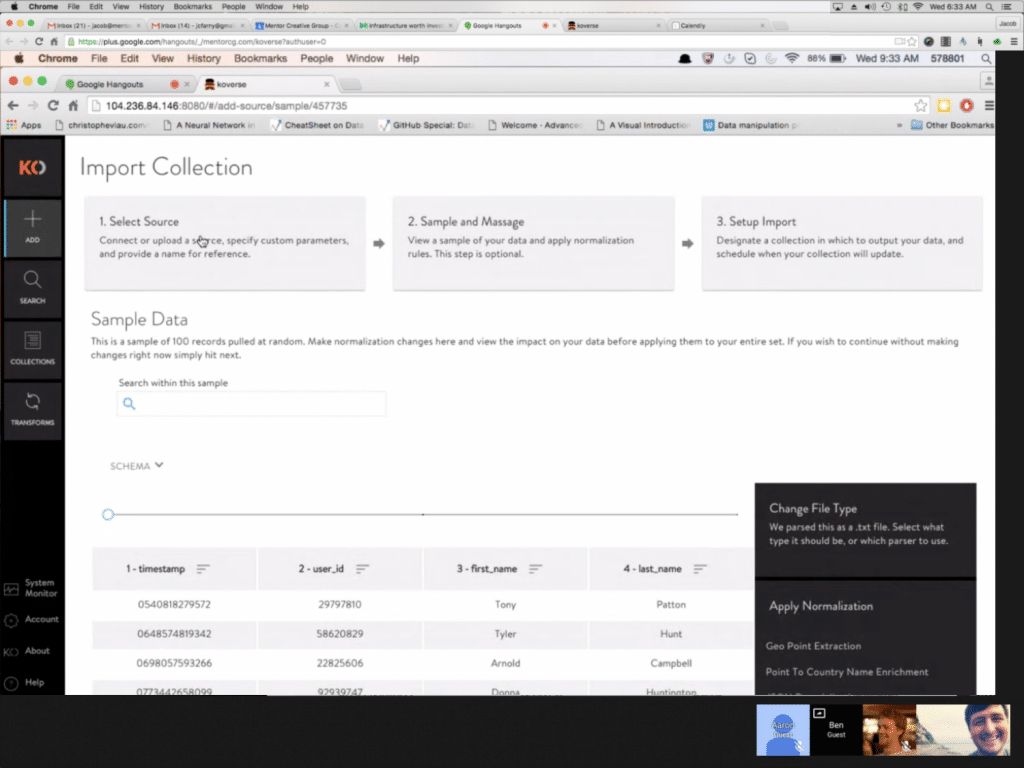

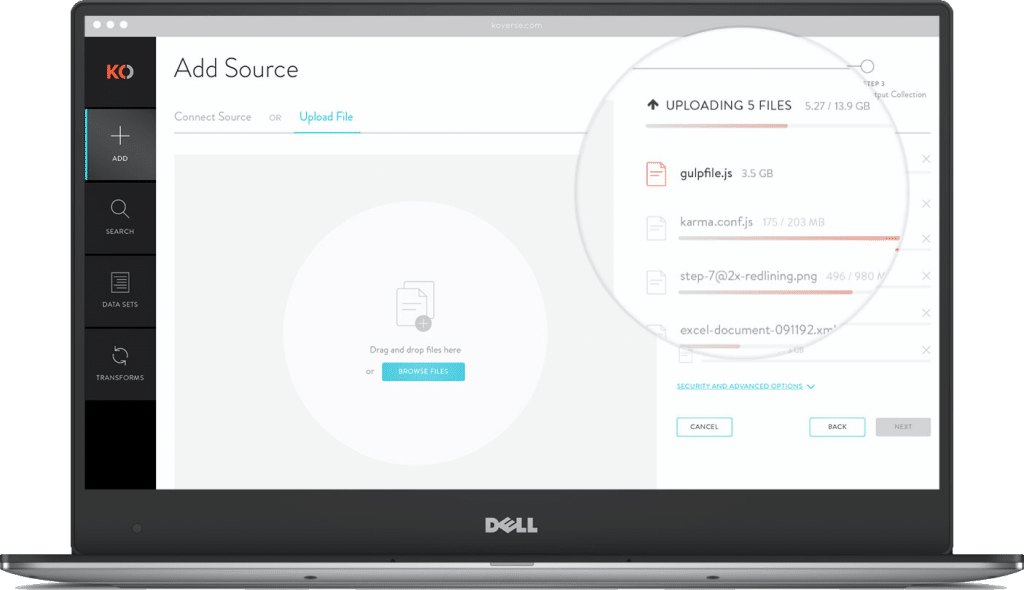

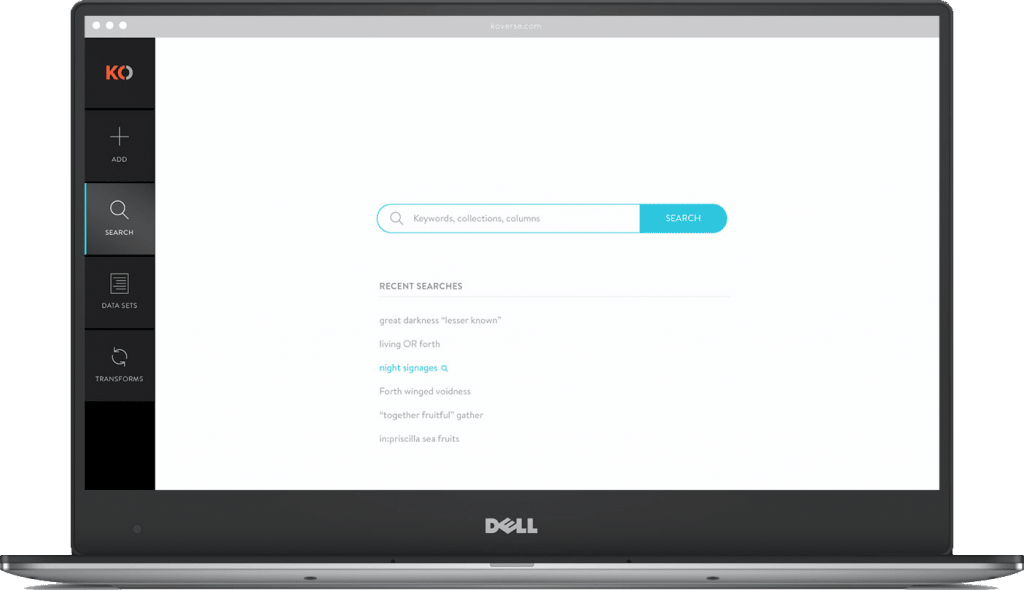

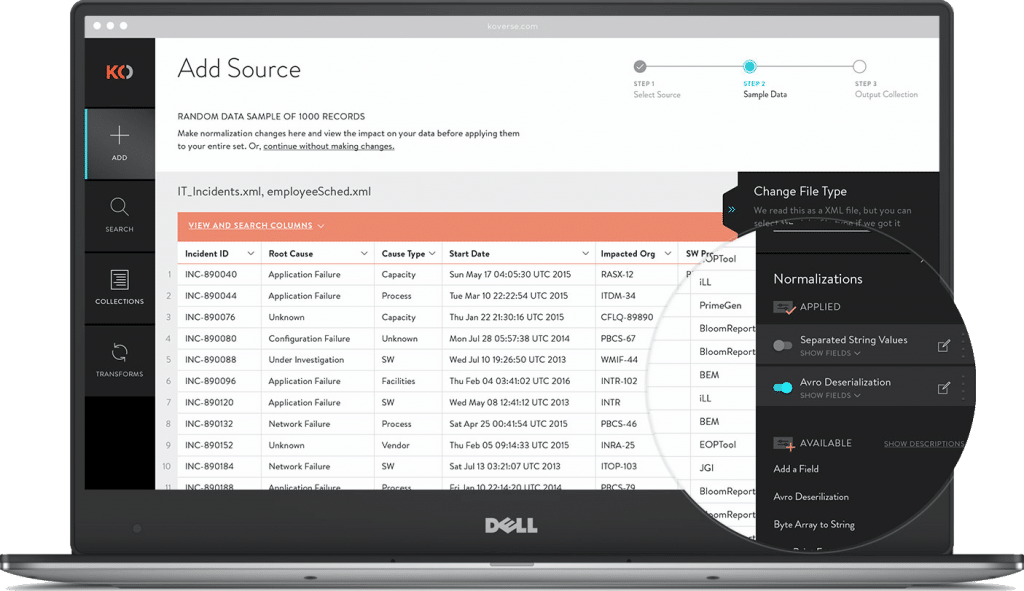

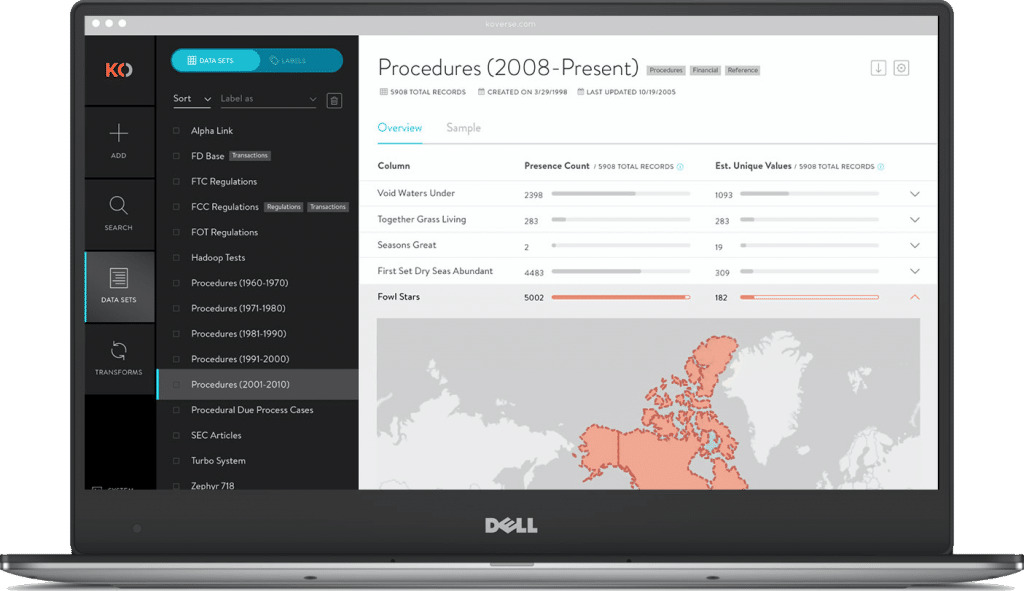

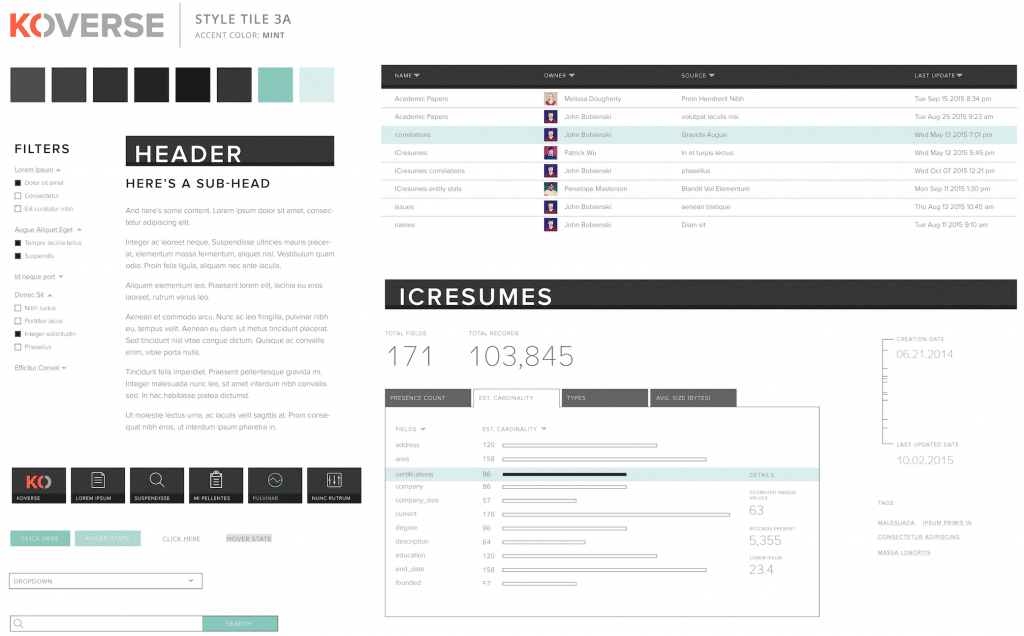

We settled on a left rail menu paradigm that would keep context continuity throughout the tool, and a main workspace where the user could go step by step through the data processing workflow.

This series of screens shows the concept of a three-step wizard flow for importing data into the platform.

GOING DEEPER WITH COMPLEX TRANSFORMS

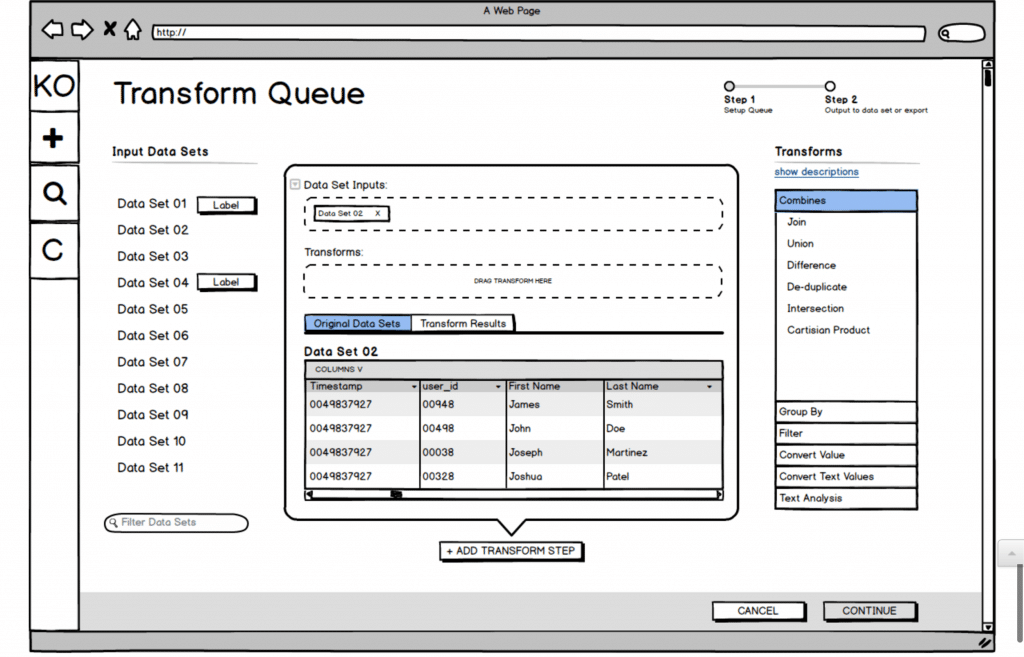

One of the big challenges with the Koverse interface is that the tool allows for transforms of the data (rules for how to systematically manipulate the data) to be chained together. These transforms could be selected from a list of common transforms, or written custom in Python.

With many data transforms in a row, the data flow becomes very complex to think about and control.
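To make the idea of chained transforms concrete, here is a minimal Python sketch. It is not Koverse's transform API: the names `drop_missing`, `rename_field`, `uppercase`, and `chain`, as well as the sample records, are hypothetical and exist only for illustration. Each transform takes a stream of records and returns a new stream, so the output of one becomes the input of the next.

```python
from typing import Callable, Dict, Iterable

# Hypothetical types for illustration only; not part of any Koverse API.
Record = Dict[str, object]
Transform = Callable[[Iterable[Record]], Iterable[Record]]


def drop_missing(field: str) -> Transform:
    """Build a transform that removes records with no value for `field`."""
    def apply(records: Iterable[Record]) -> Iterable[Record]:
        return (r for r in records if r.get(field) is not None)
    return apply


def rename_field(old: str, new: str) -> Transform:
    """Build a transform that renames `old` to `new` on a copy of each record."""
    def apply(records: Iterable[Record]) -> Iterable[Record]:
        for r in records:
            out = dict(r)  # copy so the input records are left untouched
            if old in out:
                out[new] = out.pop(old)
            yield out
    return apply


def uppercase(field: str) -> Transform:
    """Build a transform that upper-cases string values stored in `field`."""
    def apply(records: Iterable[Record]) -> Iterable[Record]:
        for r in records:
            out = dict(r)
            value = out.get(field)
            if isinstance(value, str):
                out[field] = value.upper()
            yield out
    return apply


def chain(*transforms: Transform) -> Transform:
    """Compose transforms so each one consumes the previous one's output."""
    def apply(records: Iterable[Record]) -> Iterable[Record]:
        for transform in transforms:
            records = transform(records)
        return records
    return apply


# Order matters: `uppercase("location")` only has an effect after the rename.
pipeline = chain(
    drop_missing("city"),
    rename_field("city", "location"),
    uppercase("location"),
)

rows = [{"id": 1, "city": "Seattle"}, {"id": 2, "city": None}]
result = list(pipeline(rows))
```

Even in this three-step sketch, each step depends on what the earlier steps did to the field names and values, which hints at why longer chains are hard to reason about without a visual aid.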

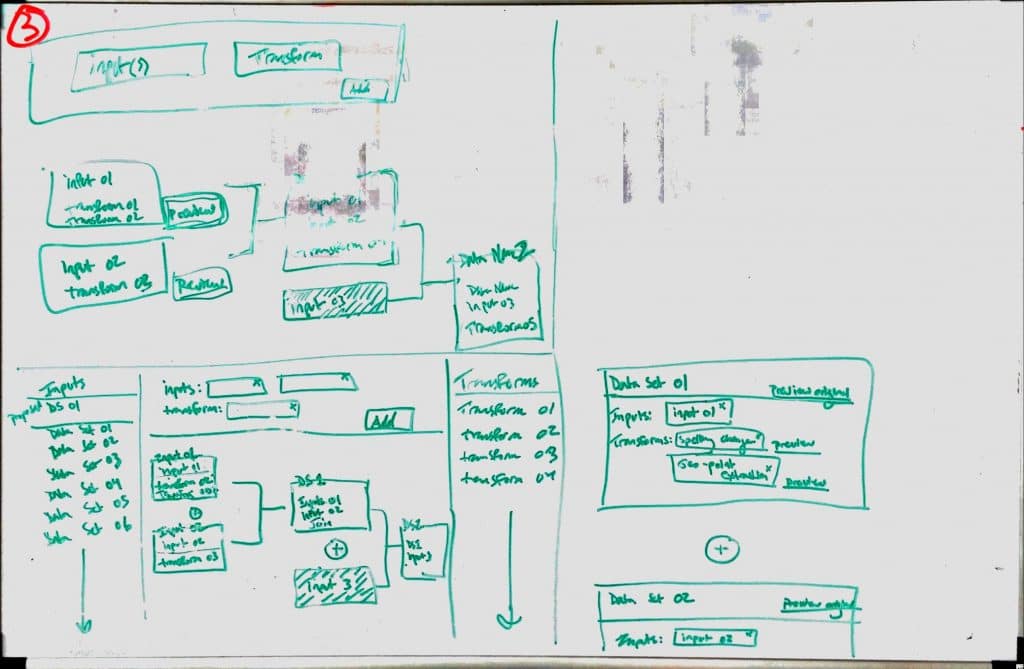

We did multiple rounds of sketching for the transform workflow, trying to make the experience intuitive and cut down on the vast cost of trial and error with large data sets. Here is one sketch and a Balsamiq wireframe exploring the interface we ultimately landed on for the design.

Development

TO CODE

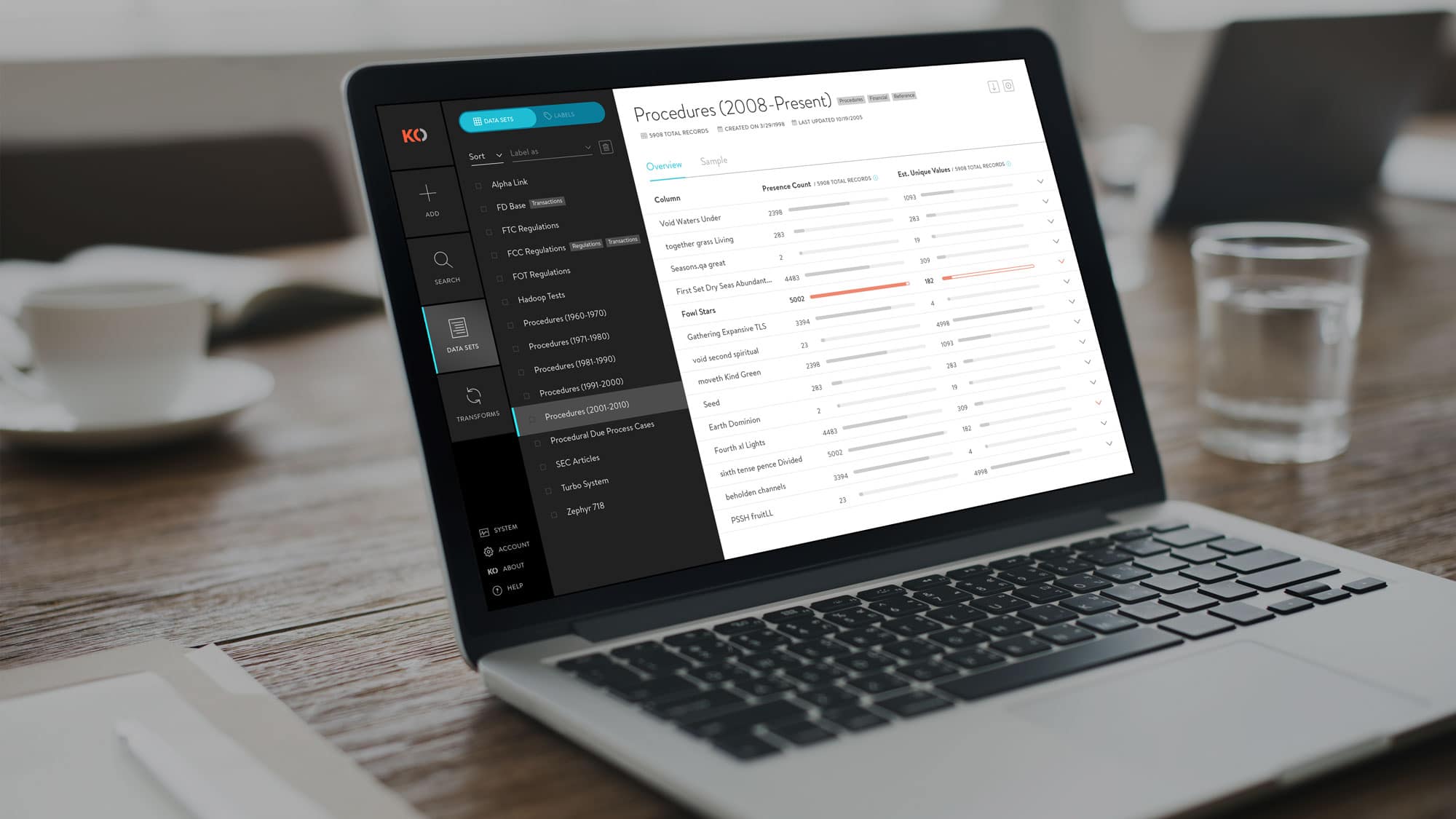

Once the core elements of the design were wireframed, we went into a more ‘sketch to code’ phase where the prototype was being built in code and running with dummy data.

The prototype was built with AngularJS Material, and we tried not to stray too far from the default components for the sake of time and efficiency. Working this way allowed us to finish each sprint with fully functional code that Koverse could then use or test further.

As the design and prototype were being worked out and built, the visual designer on the team was working on a parallel path to style the interface from a component standpoint and provide a comprehensive style guide as trailing documentation.

Ready for More?

View Another Project –

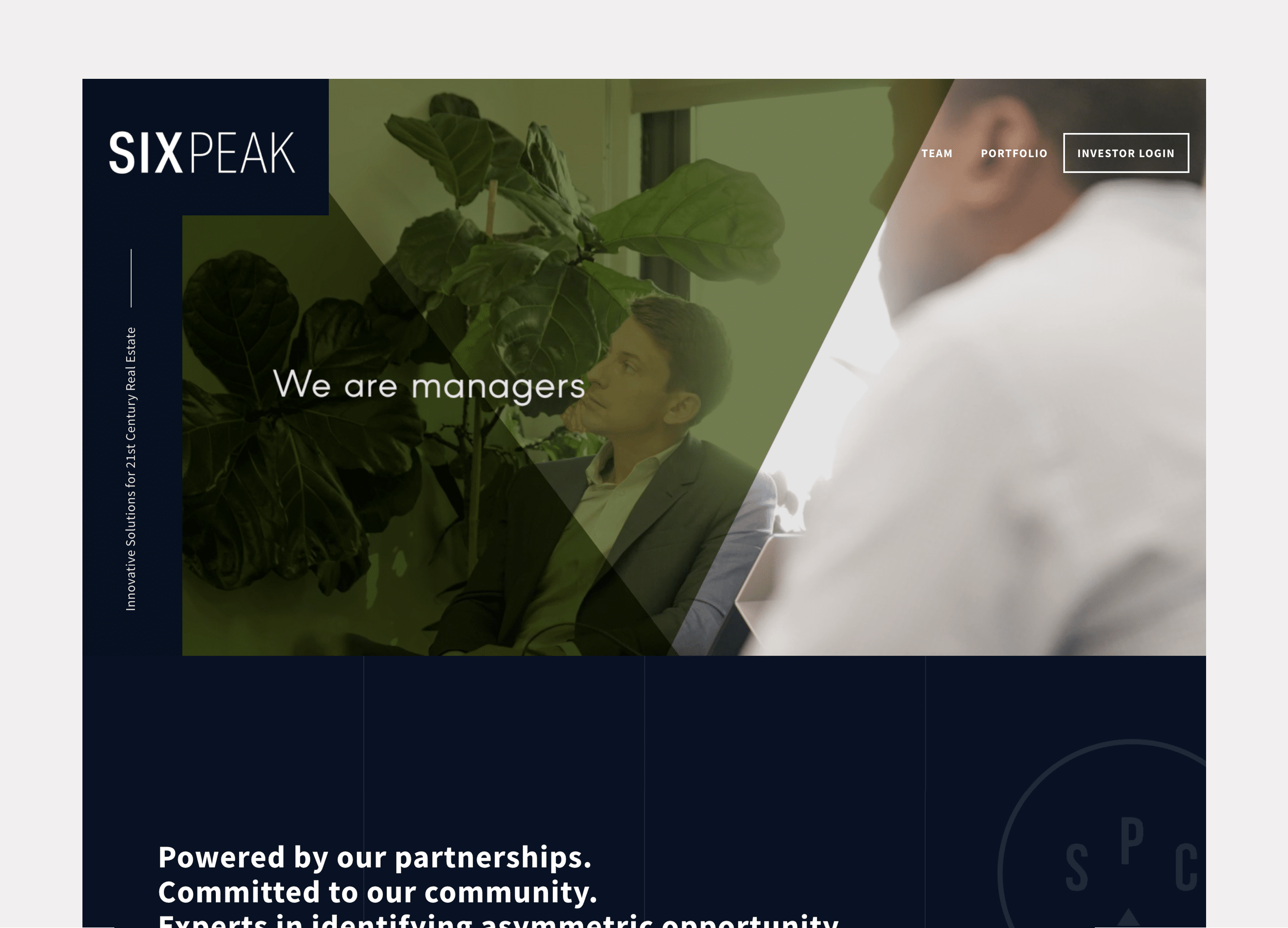

Website Design

Website design for a real estate investment & development company focused on coliving.

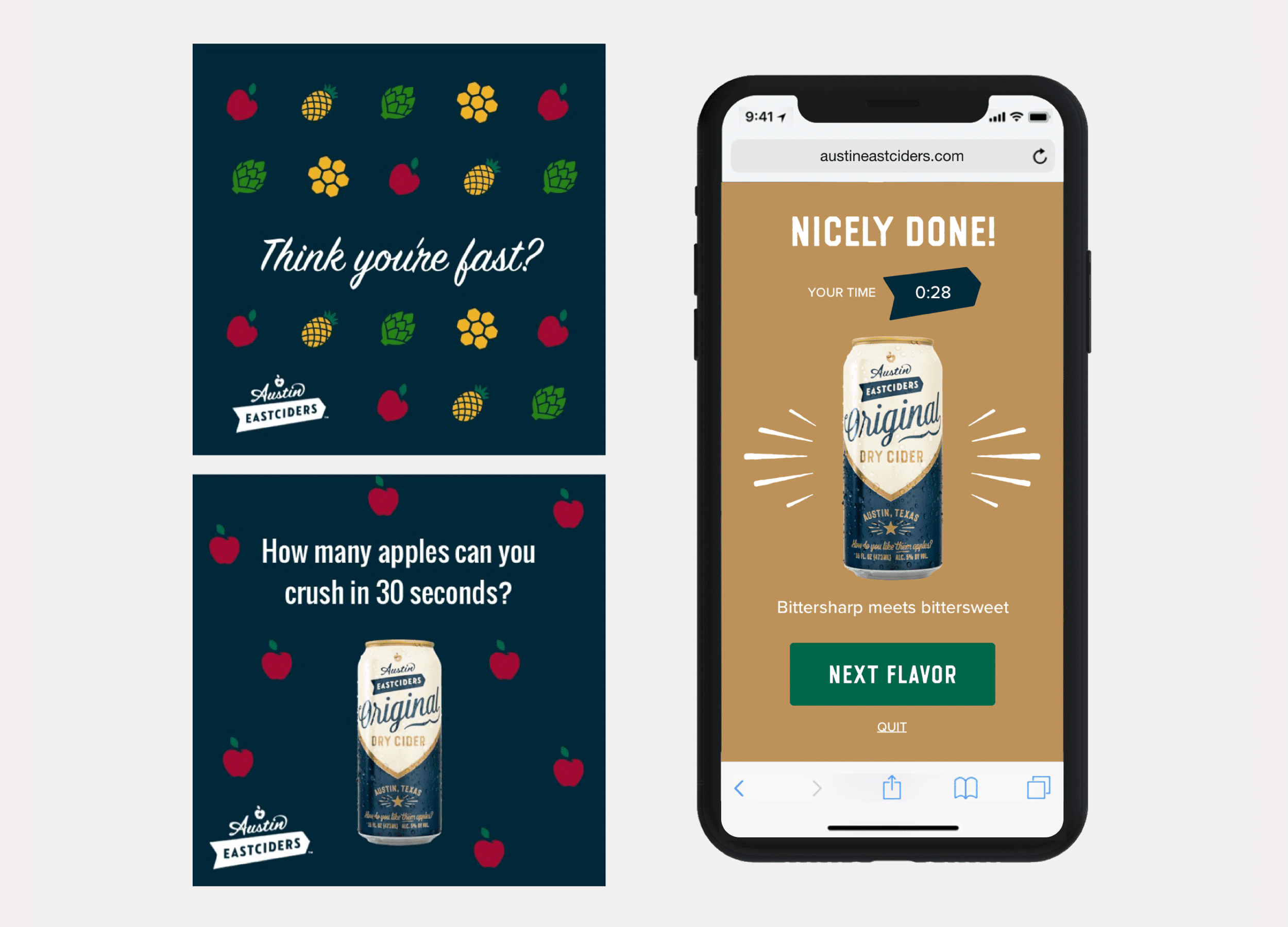

Interactive Promotional Game

A mobile game distributed through social media and played in the browser. Designed to promote four different flavors fo hard cider.